A Brief Etiology of Bullshit

December 08, 2020

If you’re reading this, I probably don’t have to tell you that Donald Trump et al. are lying about election fraud. Like me, you probably knew that Trump had complained of rigged elections in every contest since at least 2012 and laid out in no uncertain terms how he planned to do the same this year, so that when he inevitably began his caps lock polemicizing, you were ready to just kind of tune him out.

You’ve probably been repeatedly reassured by the united front of mainstream media and Twitter fact checkers that there is No Evidence of Fraud. Which, if we’re being honest with ourselves, is actually untrue: there is a ton of evidence of election fraud. It’s just that all of it is bullshit. No Credible Evidence of Fraud is more challenging from a messaging standpoint because it requires actually unpacking and analyzing all of the purported claims. Claims such as:

- Dead people voted.

- Illegal aliens voted.

- Sharpies were given to Trump voters in order to void their ballots.

- Boxes were removed from a truck near a ballot counting site.

- Precinct counts violate Benford’s Law.

- Dominion voting machines were programmed to change the results.

- Someone wore a facemask with “BLM” on it near a polling station.

- We were hacked by China, Iran, Russia, Cuba, Venezuela, and/or North Korea.

- And on and on…

The omnibus quality of the conspiracy theory, where every conceivable form of fraud has been alleged, is part of the strategy: just throw out so much stuff that a comprehensive refutation is impossible. And there is a sense that merely engaging with the claims, taking the time to explain the details and lay out the counter-evidence, is to implicitly give them a degree of credibility, to make it seem like this is yet another complex issue with arguments on both sides. And by the time that’s done, whatever was being debunked has already been replaced by a new claim. This plays exactly into Donald Trump’s plan: he does not care if his claims can be proved false because his goal is not to win any debate, but merely to have a debate exist.

Nevertheless, I found myself curious to do my own deep dive into some of these claims. There’s such a breadth of subjects and speakers involved: fringe blogs and prime time Fox, blue check pundits retweeting QAnon moms, oddballs plucked from obscurity by Rudy Giuliani to speak before state legislators, and of course, whatever the president of the United States happens to be on about today. I keep wondering: where is this actually all coming from? Is everyone literally just making this stuff up? Is there a kernel of fact somewhere that’s been twisted? Who is designing the narrative, and who really believes? Where are the boundaries between a lie, selective credulity, and simply being wrong?

Following the Thread

The following was retweeted by @realDonaldTrump on November 12 to his 88 million followers, and it caught my attention because of its specificity. It sounded like a pointer to something more tangible than broad claims of MASSIVE RIGGING: someone had done an analysis and produced a quantifiable result.

BREAKING EXCLUSIVE: Analysis of Election Night Data from All States Shows MILLIONS OF VOTES Either Switched from President Trump to Biden or Were Lost -- Using Dominion and Other Systems https://t.co/pBoCNeDL3X via @gatewaypundit

— John McLaughlin (@jmclghln) November 12, 2020

Who did this analysis? How? Was it being accurately represented by this headline? Does any analysis actually exist?

Let’s start at the tweet and work towards the source.

Donald Trump -> John McLaughlin

John McLaughlin is a political strategy and media consultant who claims to have a large number of Republican politicians as clients, including the Trump campaign. Promoting the GOP narrative of the day is literally his job, so needless to say I’m not ready to take his claims at face value.

John McLaughlin -> The Gateway Pundit

The tweet links to an article on The Gateway Pundit. The overall credibility and political leanings of the site are summed up pretty well by the site banner:

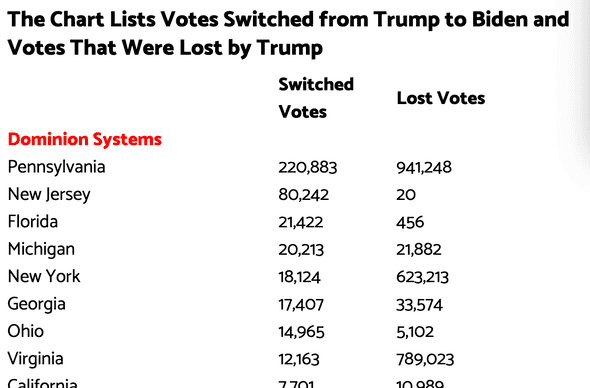

The article summarizes an analysis that Gateway Pundit “obtained” which purports to show totals of votes switched from Trump to Biden, or just outright deleted, with breakdowns by state. In total, 512,095 were switched and 2,865,757 were deleted.

It doesn’t mention how these numbers were derived, but does note that these results are “unaudited”. This caveat does nothing to temper the bold, large font conclusion that

It looks like the Democrats did everything imaginable in their attempt to steal this election. The problem was they never expected President Trump to lead a record breaking campaign and they got caught. More will be exposed.

The Gateway Pundit -> u/TrumanBlack

Within the Gateway Pundit article is a link to a post by a user named TrumanBlack on thedonald.win, which is a Reddit clone that popped up after the r/The_Donald subreddit was banned (yes, this site is as bad as you think it is). This is it, the analysis that Donald Trump was promoting.

The bombastically titled post, “🚨🚨🚨🚨🚨🚨🚨🚨HAPPENING!!! CALLING EVERY PEDE TO BUMP THIS NOW. FULL LIST OF VOTES SWITCHED OR ERASED BY DOMINION!!! AND ALL THE EVIDENCE!!! THIS IS A NUKE🚨🚨🚨🚨🚨🚨🚨🚨🚨”, lays out step by step how they derived the numbers cited in the Gateway Pundit article. I have to hand it to the author: they took care to show their work, used publicly available data sources, and shared their code so that the results are reproducible. By all appearances u/TrumanBlack made a good faith effort to do a real data analysis. This is not something that someone just made up!

So are the results for real?

The Evidence, Examined

Here’s a summary of what u/TrumanBlack did:

First they downloaded data from the New York Times that contains the timeseries of election count updates. I presume this is the same data used to back graphics on nytimes.com. For an example, here is the link to the Pennsylvania data. There’s a lot in here, but the important bit is an array named timeseries whose elements look like this:

    {
      "vote_shares": {
        "trumpd": 0.513,
        "bidenj": 0.473
      },
      "votes": 1474989,
      "eevp": 21,
      "eevp_source": "edison",
      "timestamp": "2020-11-04T03:03:39Z"
    },

You have the percentage for each candidate, total number of votes tallied, “estimated expected vote percentage” (eevp), eevp source (which is always “edison”), and when the update occurred.

u/TrumanBlack’s original post gave a download link to a Python script that does all the data loading and counts the “lost” votes. I uploaded it to a gist for easier viewing. It’s not the cleanest code I’ve ever seen, but it’s not too hard to figure out what’s going on:

- Scan the timeseries in order.

- For each element in the timeseries:

  - Calculate the number of votes for Trump and Biden by multiplying their vote share by the total vote count.

  - Compare each candidate’s current vote to their count from the previous time step.

  - If a candidate’s vote count has gone down, then their votes were either deleted and/or switched to the other candidate.

- Print the sum of lost and switched votes across all timeseries steps.
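The steps above can be sketched roughly as follows. This is not u/TrumanBlack's script, only a minimal reconstruction of the logic as described: the function name `count_lost_votes`, the `tolerance` parameter, and the two-element sample data are made up for illustration, and the sketch lumps "lost" and "switched" votes together rather than separating them.

```python
def count_lost_votes(timeseries, candidates=("trumpd", "bidenj"), tolerance=0.0):
    """Sum the apparent vote decreases for each candidate across updates.

    `tolerance` is a hypothetical knob: drops no larger than this fraction
    of the current total vote count are ignored.
    """
    lost = {name: 0.0 for name in candidates}
    previous = None
    for update in timeseries:
        # Implied count = reported (rounded) share * reported total votes.
        current = {
            name: update["vote_shares"][name] * update["votes"]
            for name in candidates
        }
        if previous is not None:
            for name in candidates:
                drop = previous[name] - current[name]
                if drop > tolerance * update["votes"]:
                    lost[name] += drop
        previous = current
    return lost


# Made-up data in the same shape as the timeseries elements shown earlier.
sample = [
    {"vote_shares": {"trumpd": 0.5, "bidenj": 0.5}, "votes": 1000},
    {"vote_shares": {"trumpd": 0.4, "bidenj": 0.6}, "votes": 1100},
]

lost = count_lost_votes(sample)
print({name: round(votes) for name, votes in lost.items()})
# prints {'trumpd': 60, 'bidenj': 0}
```

Note that every count here is inferred from a rounded share multiplied by a total, never read directly from a tally.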

The logic here seems straightforward: as we count votes, they should only add to a candidate’s total, so if their count ever goes down something is amiss. I reproduced the results in a Jupyter notebook and spot-checked a few examples, and it turns out there are lots of cases where candidates’ vote counts decrease!

Is this a smoking gun that proves that Soros-funded antifa operatives have hacked our nation’s voting systems? Well, no. I see two big problems here, one in the implementation logic and one in the basic premise of the analysis.

Measurement error

Take another look at the sample data above. Vote shares are stored with only three significant digits. If Trump’s vote share is reported as 51.3%, then the actual vote share could be anywhere in the interval [51.25%, 51.35%). That is a measurement error of ±0.05 percentage points. When the vote share is multiplied by the total vote count, this rounding can make a candidate’s calculated votes appear to decrease in some cases, even though the real count only went up.

But u/TrumanBlack is no fool and he realizes he needs to account for this, so he adds a check to only count vote decreases that are greater than the ±0.05% margin of error. However, this is actually not quite right: the possible error margin for the difference of the vote counts is the sum of each count’s error, so he should be counting only differences in excess of ±0.1%.
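To make the rounding effect concrete, here is a small worked example with invented numbers. It assumes the margin is applied as a fraction of the total vote count at each step, which is my reading of the check rather than something confirmed from the script; `implied_votes` is a hypothetical helper.

```python
def implied_votes(true_votes, total_votes, digits=3):
    """Votes implied by a share rounded to `digits` decimal places."""
    share = round(true_votes / total_votes, digits)
    return share * total_votes


# Invented numbers: the candidate actually GAINS 30 votes between updates.
total_before, total_after = 1_000_000, 1_000_100
before = implied_votes(512_510, total_before)  # share rounds up to 0.513
after = implied_votes(512_540, total_after)    # share rounds down to 0.512

apparent_drop = before - after  # roughly 949 votes "lost"

# One count's rounding error vs. the sum of both counts' errors.
single_margin = 0.0005 * total_after
combined_margin = 0.0005 * total_before + 0.0005 * total_after

print(apparent_drop > single_margin)    # True: flagged by the ±0.05% check
print(apparent_drop > combined_margin)  # False: within the ±0.1% margin
```

A real gain of 30 votes shows up as a loss of about 949, which clears the single-count margin but not the combined one.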

I updated the analysis to use the correct margin of error and the vast majority of the “lost” votes disappeared. In Pennsylvania, the number of lost/switched votes drops from 1,162,131 to 14,892; Michigan from 42,095 to 4,846; and in Florida from 21,878 to zero. Nearly all of this bombshell discovery can be explained away by not properly accounting for measurement error.

The map is not the territory

After accounting for measurement error, there were still a few lost votes coming out of the analysis, which brings me to the second, more fundamental issue: sometimes, your data is just wrong. The numbers in the dataset aren’t the official numbers, rather just numbers that the New York Times is deriving from whatever data feeds they have. This isn’t a direct view into the voting machines. It would not be surprising if there were manual data entry errors which were later corrected, updates posted out of order, or any number of other minor issues that might come up with a live data stream.

Imagine if you were watching SportsCenter and keeping an eye on the scorebox for a Warriors game. You see their score go up to 76 but then a second later drop to 75. What would you think is the more likely explanation? Did bribed referees just illegally deduct a point, or did ESPN accidentally display the wrong number and then correct it? All of this is a long-winded way to say: the election day data feed from the New York Times is not the same thing as the vote count itself, and errors in one do not necessarily imply errors in the other.

The Fact Ecosystem

My goal here is not to “debunk” any particular voter fraud theory, but to explore a case study in how facts enter the election fraud discourse.

To my surprise, I found myself with a degree of empathy for the original author, u/TrumanBlack. He is curious, and excited to discover new truths. I can imagine myself doing something like this.

The power of confirmation bias is evident here. In my years working as a data scientist, I’ve found that the ability to gut check results is invaluable. It is common to have errors in the analysis or in the data itself, so it’s important to compare results to expectations and give results with a high surprise factor extra scrutiny. Sometimes you find a mistake to correct, and sometimes you convince yourself that the results are indeed true and update your priors accordingly. In this case, my prior belief was that there was no election fraud, and so I went looking for mistakes. u/TrumanBlack, for his part, saw what he already knew to be true: that millions of votes had been altered.

It’s not, strictly speaking, a lie to say that “Analysis of Election Night Data from All States Shows MILLIONS OF VOTES Either Switched from President Trump to Biden or Were Lost”. It is a true fact that there exists an analysis that shows this. It’s just that this analysis is by an anonymous user on an obscure internet message board which has been taken at face value with no verification whatsoever by the outlets that are reporting on it. Knowing the origin of information is important for assessing its credibility, but this is obscured by cynical actors laundering the information up the media food chain. The process goes something like this:

- Random internet commentators connect stray pieces of information they find

- Which gets reported by fringe media outlets (“People are saying…“)

- Which gets amplified by activists (“Big if true: A new report claims…“)

- Which gets inserted into mainstream discourse by political leaders (Retweets)

With the end result that the president of the United States is using his office to promote a poorly written Python script posted to a banned Reddit community, but with enough layers of reference in between to create the impression that these are simply facts that everyone is talking about that are too important to ignore. Now, this fact has been introduced into the discourse, and can be pointed to as one more piece of evidence of fraud.

- Narrative is reinforced, making it incrementally easier to find new conforming data points.

Which closes the loop on the confirmation cycle. When Donald Trump started making noise about rigged elections, it was jump starting this process of grabbing any piece of information that could even hypothetically relate to malfeasance, which then makes it even easier to interpret that next piece of evidence as a smoking gun, in a cascade of conspiracy that makes people increasingly certain and increasingly unmoored from reality, until we end up with people filing affidavits in court that the ballots they saw weren’t creased enough, or whatever.

Layers of reference create the impression of credibility (lots of people are saying it!) and also remove responsibility for the content: I’m not the one making this claim, just pointing at a claim that someone else is making and adding my commentary. Talking about who is “lying” becomes more complicated; in the example discussed here, there isn’t anyone who is literally making things up. I don’t judge the original author of the analysis, but there is plenty of blame to go around for those who promoted it with no concern for its veracity. To reiterate: information traveled from an anonymous message board to the president of the United States, with no one in between pausing to verify the origin or correctness of that information.

Epistemic Divergence

How is it that so many people can look at the claims presented in this election fraud fiasco and see that they are so obviously bullshit, while other people look at them and see a mountain of evidence? For the most part, the most prominent voices aren’t wholesale making up facts out of thin air (at least facts with any specificity, I’m not counting shouting “rigged!” five times a day as a statement of fact). But rather, they are referencing some other dubious primary source, whose origin is obscured by layers of citations, and people accept that fact without drilling down into where it’s really coming from.

The thing is though, this is generally how people, myself included, learn about the world. Consider the following claim that was the subject of a minor scandal this year: “In 2017, Donald Trump paid $750 in federal income tax.” If you saw a tweet about this, which linked to a Business Insider article, which summarized a New York Times investigation, which was based on tax returns they obtained—how far down that chain would you need to go before you accepted it as true? Normal people do not comb through source code or tax returns. A world interpreted only through primary documents and one’s own intellect would be a very narrow one.

So you encounter the following claims: “Donald Trump paid $750 in taxes” and “512,095 votes were switched from Trump to Biden”. Both are promoted by political leaders and news outlets; both are denounced as falsehoods by different political leaders and news outlets. How do you decide what is true and what is false?

I think we do a sort of Bayesian process. To take the first example, I have certain prior beliefs for “Donald Trump is corrupt” (very high belief) and “would the New York Times lie to me” (somewhat low belief). I observe the data point “the New York Times says that Donald Trump paid $750 in taxes”. Based on my priors, I accept this as a true fact, increase my belief “Donald Trump is corrupt”, and slightly decrease my belief “would the New York Times lie to me”.

Similarly, I have priors for “there was massive election fraud” (very low belief) and “would the Gateway Pundit lie to me” (very high belief), and observe the data point “the Gateway Pundit says 2,865,757 votes were deleted.” I judge this as false, increase my belief “would the Gateway Pundit lie to me”, and slightly decrease my belief “there was massive election fraud.”

However, a person with different priors (NYT is fake news, the election was rigged) would update their beliefs in exactly the opposite way, and it would be locally rational for them to do that, given the priors they had. With each data point, we either harden our belief that the speaker is a liar, or grow more receptive to the controversy they are promoting. In the next round, we’re even more primed to move in different directions, in a self-reinforcing cycle. A cascade of confirmation bias bifurcates us into different realities.[^1]
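As a toy illustration of that divergence, here is a sketch of the joint update for a single observation: a source asserts a claim. Every number in it is an assumption chosen for illustration, not something measured: the likelihood table says an honest source mostly asserts true things and a lying source mostly asserts false ones, and the two sets of priors stand in for the skeptic and the believer.

```python
# Assumed likelihoods: P(source asserts the claim | source is a liar?, claim true?)
LIKELIHOOD = {
    (False, True): 0.9,   # honest source, claim is true
    (False, False): 0.1,  # honest source, claim is false
    (True, True): 0.3,    # lying source, claim is true
    (True, False): 0.7,   # lying source, claim is false
}


def update(p_claim, p_liar):
    """Posterior (P(claim is true), P(source is a liar)) after the assertion.

    Treats the two prior beliefs as independent and applies Bayes' rule
    over the four (liar, claim) combinations.
    """
    joint = {}
    for liar in (True, False):
        for claim in (True, False):
            prior = (p_liar if liar else 1 - p_liar) * (
                p_claim if claim else 1 - p_claim
            )
            joint[(liar, claim)] = prior * LIKELIHOOD[(liar, claim)]
    total = sum(joint.values())
    post_claim = (joint[(True, True)] + joint[(False, True)]) / total
    post_liar = (joint[(True, True)] + joint[(True, False)]) / total
    return post_claim, post_liar


# Same observation, different (made-up) priors.
skeptic = update(p_claim=0.05, p_liar=0.9)
believer = update(p_claim=0.9, p_liar=0.1)
print("skeptic:  claim %.3f, liar %.3f" % skeptic)
print("believer: claim %.3f, liar %.3f" % believer)
```

Under these assumptions the skeptic ends up believing the claim less and distrusting the source more, while the believer moves the other way on both, so the same data point pushes the two further apart.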

Trust and credibility matter. If we end up with different sets of gatekeepers, we have not just different viewpoints but entirely different universes of observed facts and reality.

[^1]: Curious to think about this more. Is this related to a more general principle of belief formation? How do we bring people back together?